Building Agents: A 3-Year History

Where we were, where we are, and where (I think) we're going

The incredible thing about building AI agents is that very few people, if any, have more than 3 years of experience applying LLMs to product engineering, especially when it comes to what we call “agents”.

November 30, 2022: First Contact

It’s wild, but I remember where I was when I first tried ChatGPT. I was waiting for a play to start. Sitting with my wife and parents, I decided that we should test out this thing that everyone was talking about. The first message I sent was to write a poem about my brother in the voice of a somewhat well-known public figure.

At the time, I didn’t realize where things were heading.

Shortly after that, my cofounder and I decided to build one of the hundreds of Text-to-SQL startups that spun up during 2023.

2023: The year of figuring it out

Everyone was trying to figure out the limits of LLMs. We were also figuring out the best patterns for building with them, the system designs, all that good stuff.

A good example of this is LangChain. Their GitHub project took off like nobody’s business. After a year, most serious AI engineers deemed it useless. In fact, LangChain ended up launching a new project called LangGraph, which was basically a rewrite of the original project.

There were a few challenges that most companies working with AI faced through most of 2023.

Dealing with Small Context Windows

At the start of 2023, the best model available via API was OpenAI’s text-davinci-003. It had a context window of roughly 4,000 tokens, compared to context windows of 1 million tokens only 3 years later.

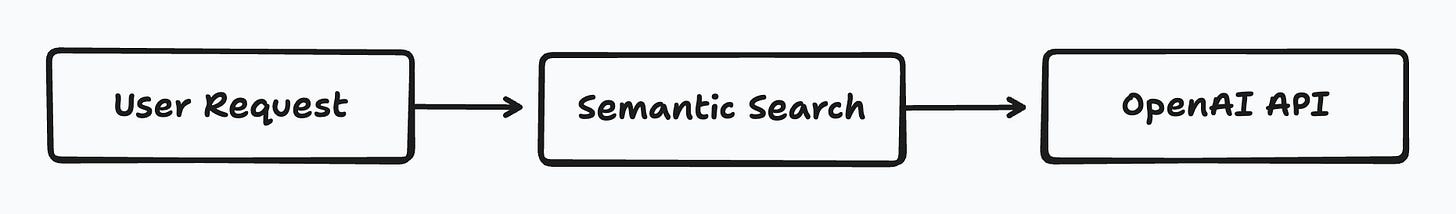

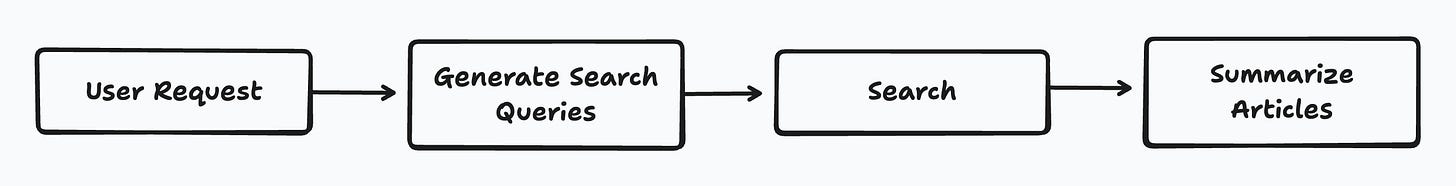

This made it challenging to include sufficient context for an LLM to actually succeed at tasks. This is where RAG became extremely popular and basically lit the fire under the vector DB craze of 2023. Since it was impossible to include a large document in context, engineers would use semantic search in conjunction with a vector database to pick out the chunks deemed most relevant to the user request. Most applications looked like this:

The lack of context also made it very difficult to provide “follow up” functionality. Most applications were constrained to one-shot attempts at tasks with no context of previous actions, conversation, etc.

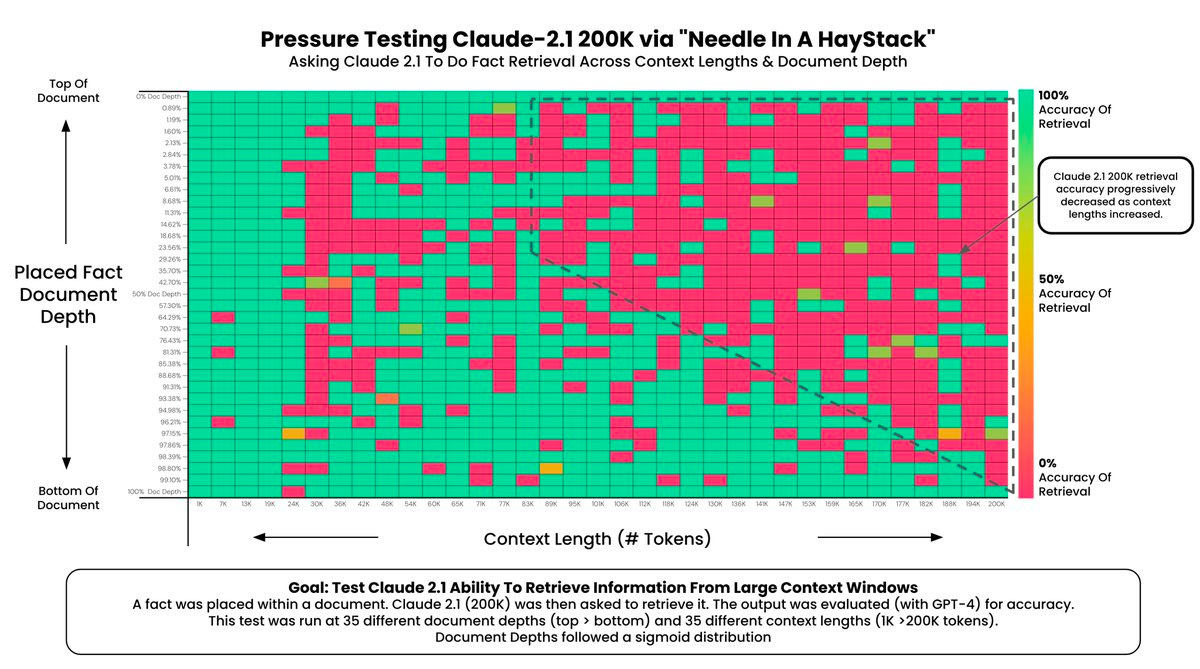
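The retrieval step described above can be sketched in a few lines. This is a minimal illustration, not any particular product's pipeline: `embed` is a toy bag-of-words stand-in for a real embedding model, the in-memory list stands in for a vector database, and all function names are assumptions made for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def chunk(document: str, size: int = 12) -> list[str]:
    """Split a document into fixed-size word windows."""
    words = document.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Stuff only the retrieved chunks into the prompt, not the whole document."""
    context = "\n---\n".join(retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The point of the pattern is the last function: with a context window of only a few thousand tokens, the prompt carries the top-k chunks instead of the full document, so answer quality depends heavily on whether retrieval picked the right ones.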

By the end of the year, the 128k gpt-4-turbo and 200k claude-2.1 models were released. While they allowed you to stuff in more context, they didn’t actually solve the problem.

One-shot tasks felt like the limit

Even when given perfect (and sufficient) context, the models during 2023 weren’t actually that good at most tasks.

Most of the “large context” models would struggle with needle-in-a-haystack problems. If the answer or solution was nested somewhere in lots of context, the model would likely just miss it.

Aside from retrieval accuracy, the models’ general performance on tasks would decrease as context grew. This is still true today, but to a much smaller degree.

Before function calling and JSON mode were released during the year, it was also difficult to get the LLMs to output in a consistent and reliable way.
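A typical workaround from that period, sketched under assumptions: pull the first JSON object out of a free-text reply, check it for required keys, and retry with the error message if it fails. The helper names are hypothetical, and `llm` is any callable that takes a prompt string and returns the model's reply.

```python
import json
import re
from typing import Callable


def parse_json_reply(reply: str, required: set[str]) -> dict:
    """Extract a JSON object from a chatty reply and check its keys."""
    match = re.search(r"\{.*\}", reply, re.DOTALL)
    if not match:
        raise ValueError("no JSON object found")
    data = json.loads(match.group(0))  # JSONDecodeError is a ValueError
    missing = required - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


def ask_for_json(prompt: str, llm: Callable[[str], str],
                 required: set[str], retries: int = 2) -> dict:
    """Call the model, re-prompting with the error until the reply parses."""
    last_error = None
    for _ in range(retries + 1):
        full_prompt = prompt if last_error is None else (
            f"{prompt}\n\nYour last reply was invalid ({last_error}). "
            "Reply with JSON only."
        )
        try:
            return parse_json_reply(llm(full_prompt), required)
        except ValueError as err:
            last_error = err
    raise ValueError(f"no valid JSON after {retries + 1} attempts: {last_error}")
```

Function calling and JSON mode moved most of this burden to the model provider, which is why glue code like this became less common afterwards.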

All of this ultimately made it difficult to get the LLMs to do anything useful outside of one-shot tasks like writing.

Cursor: An interesting performance hack

Cursor was able to get around the performance and reliability issues by fine-tuning a “fast apply” model. At the time, I would have been skeptical of an LLM writing a structured payload to apply edits to my files.

So, as a workaround, Cursor would treat LLMs as a conversation layer and then feed the response from the LLM into their fast apply model. This model would rewrite the entire file, using the recommendations and context from the conversation the user was having with GPT-4 or any of the SOTA models at the time.

I would attribute their early success and traction to this engineering decision that ultimately made them one of the few AI products that were actually useful during this time.

2024: The year of the workflows

At this point, LLM applications started to show some promise when you organized them into a DAG-like structure. The idea was to decompose a larger goal into many small tasks/steps. This gave you the ability to engineer the context, prompt, logic, etc. into a node with the goal of accomplishing that small step.

The idea was that if you could nail every node, then the integrity of the workflow would hold up.
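A minimal sketch of that DAG structure, assuming a Text-to-SQL style workflow. The node names and prompts are made up for illustration, and `fake_llm` is a stand-in for a real model call so the example runs offline. Each node lists the upstream nodes it depends on, and the standard library's `graphlib.TopologicalSorter` decides the execution order.

```python
from graphlib import TopologicalSorter
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call."""
    return f"<output for: {prompt}>"


# Each node: (upstream dependencies, function that builds its prompt from context).
Node = tuple[list[str], Callable[[dict[str, str]], str]]

WORKFLOW: dict[str, Node] = {
    "interpret": ([], lambda ctx: f"Restate the request: {ctx['request']}"),
    "find_tables": (["interpret"], lambda ctx: f"List tables for: {ctx['interpret']}"),
    "write_sql": (
        ["interpret", "find_tables"],
        lambda ctx: f"Write SQL using {ctx['find_tables']}",
    ),
}


def run_workflow(request: str, llm: Callable[[str], str] = fake_llm) -> dict[str, str]:
    """Run every node once, in dependency order, accumulating outputs in ctx."""
    ctx = {"request": request}
    graph = {name: deps for name, (deps, _) in WORKFLOW.items()}
    for name in TopologicalSorter(graph).static_order():
        _, make_prompt = WORKFLOW[name]
        ctx[name] = llm(make_prompt(ctx))
    return ctx
```

Every node sees only what its prompt builder pulls out of `ctx`, which is exactly where the context-routing pain described in the next section comes from.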

Organizing context and dependencies

As the number of nodes/steps in your workflow increased, it became very difficult to route both general context and workflow-specific context into each successive step.

Commonly, the input of a given node would depend on context from a different step. There were many cases where you would need to build in mechanisms that would trigger context retrieval in specific situations.

From an engineering perspective, this made the application very hard to manage. One, it introduced tons of edge cases where small details between steps were lost, which degraded performance. Two, any new feature required extensive planning of how it would fit in, where the workflow would diverge, what dependencies were required, etc.

Multi-step tasks unlocked, but still limited

Models had gotten a lot better at their outputs and adhering to task instructions. However, if the task was too broad or required any sort of judgement, they were unreliable.

In our workflows, we had some gates where we would give the LLM a very rigid framework for making decisions and that worked alright, but broke down during complex requests.

Workflows tend to work somewhat well when you can explicitly state all the steps required to accomplish a task. When the number of steps required to accomplish a task is ambiguous and/or unpredictable, workflows reach their limits very fast.

During this year, we learned that chain-of-thought prompting could actually boost LLM performance somewhat significantly. By allowing the LLM to “think” about the task it was about to attempt to solve, you could feed that back into the input of the next LLM call and it improved task adherence and performance.
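As a rough sketch of that two-call pattern (the prompt wording and function name are assumptions, and `llm` is any callable that maps a prompt string to a reply string):

```python
from typing import Callable


def solve_with_reasoning(task: str, llm: Callable[[str], str]) -> tuple[str, str]:
    """First call produces reasoning only; second call answers with it in context."""
    reasoning = llm(
        "Think step by step about how to approach this task. "
        f"Do not answer yet.\n\nTask: {task}"
    )
    answer = llm(
        f"Task: {task}\n\nPlan:\n{reasoning}\n\n"
        "Follow the plan and give only the final answer."
    )
    return reasoning, answer
```

Keeping the reasoning as a separate return value also makes it easy to log or inspect when a step goes wrong.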

Perplexity: A workflow at its core

A perfect example of a workflow that gained traction quickly and was actually useful is Perplexity. Obviously, I’m oversimplifying all the details that go into building Perplexity, but this was their basic workflow:

This worked really well because a web search followed the same steps 95% of the time.

2025: The year of the agents

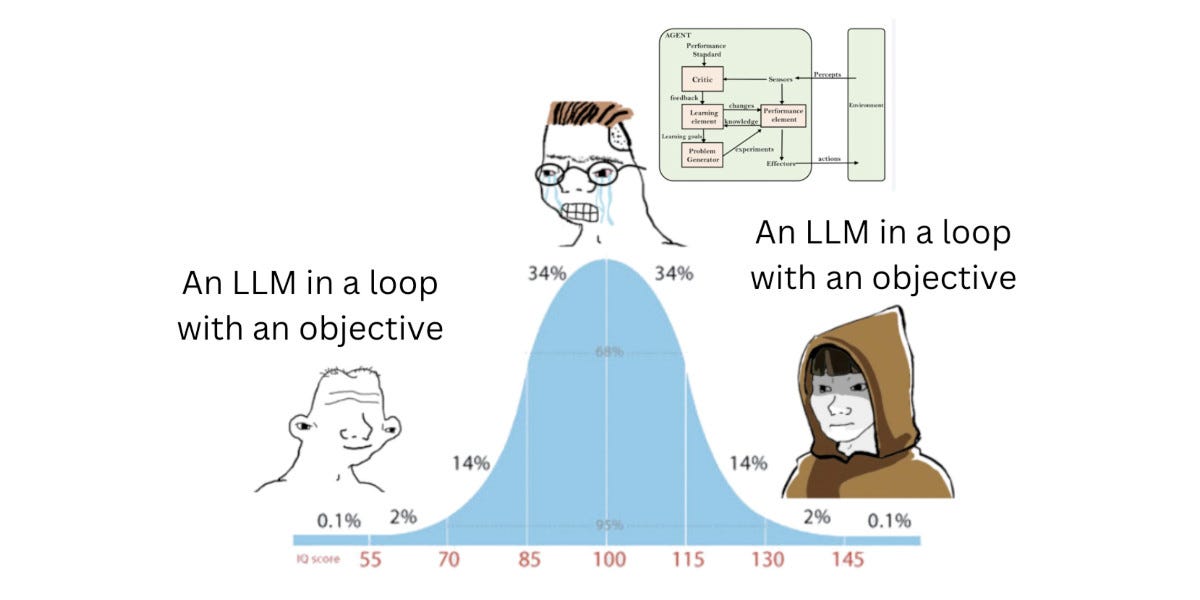

Everything we’ve described up to this point actually isn’t an agent. An agent in its simplest form is a for loop that includes an LLM, essentially letting the LLM recursively work on a problem until it deems it finished.
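That loop can be sketched in a few lines. This is an illustration under assumptions, not any specific framework's API: `decide` stands in for the LLM call that picks the next action, the tool registry is a plain dict, and `max_steps` is a simple budget so the loop cannot run forever. The scripted policy exists only so the example runs without a model.

```python
from typing import Callable

Tool = Callable[[str], str]
Policy = Callable[[list[str]], tuple[str, str]]


def run_agent(task: str, decide: Policy, tools: dict[str, Tool],
              max_steps: int = 10) -> str:
    """Let the policy pick tools until it chooses 'finish' or the budget runs out."""
    history = [f"task: {task}"]
    for _ in range(max_steps):
        action, arg = decide(history)  # in a real agent, this is the LLM call
        if action == "finish":
            return arg
        tool = tools.get(action)
        observation = tool(arg) if tool else f"unknown tool: {action}"
        history.append(f"{action}({arg}) -> {observation}")
    raise RuntimeError("step budget exhausted")


def scripted_policy(history: list[str]) -> tuple[str, str]:
    """Hypothetical stand-in for the model: call 'add' once, then finish."""
    if len(history) == 1:
        return ("add", "2,3")
    return ("finish", history[-1].split("-> ")[1])


TOOLS: dict[str, Tool] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split(","))),
}

result = run_agent("add 2 and 3", scripted_policy, TOOLS)
```

The difference from a workflow is that nothing here fixes the number or order of steps. The model decides both, which is why its decision quality matters so much.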

There had been many attempts at this, with the AutoGPT project being one of the first. Additionally, you had projects like GPT Engineer, which was the precursor to Lovable.

Before reasoning models, it felt like the models weren’t good enough at making decisions to run in a recursive fashion. They would often go into “doom loops” where they would iterate endlessly on a task, failing over and over again at a given step.

We had glimpses of agents through coding tools like Bolt, Replit, v0, etc. These products have obviously benefitted from improved model capabilities, but initially their loop wasn’t too complex… Write code, run code, write code, run code… finished. I’m once again oversimplifying, but these tools often broke down in the face of complex applications.

However, the release of o1 from OpenAI at the end of 2024 was the first time that we actually started to see an LLM make intelligent decisions reliably.

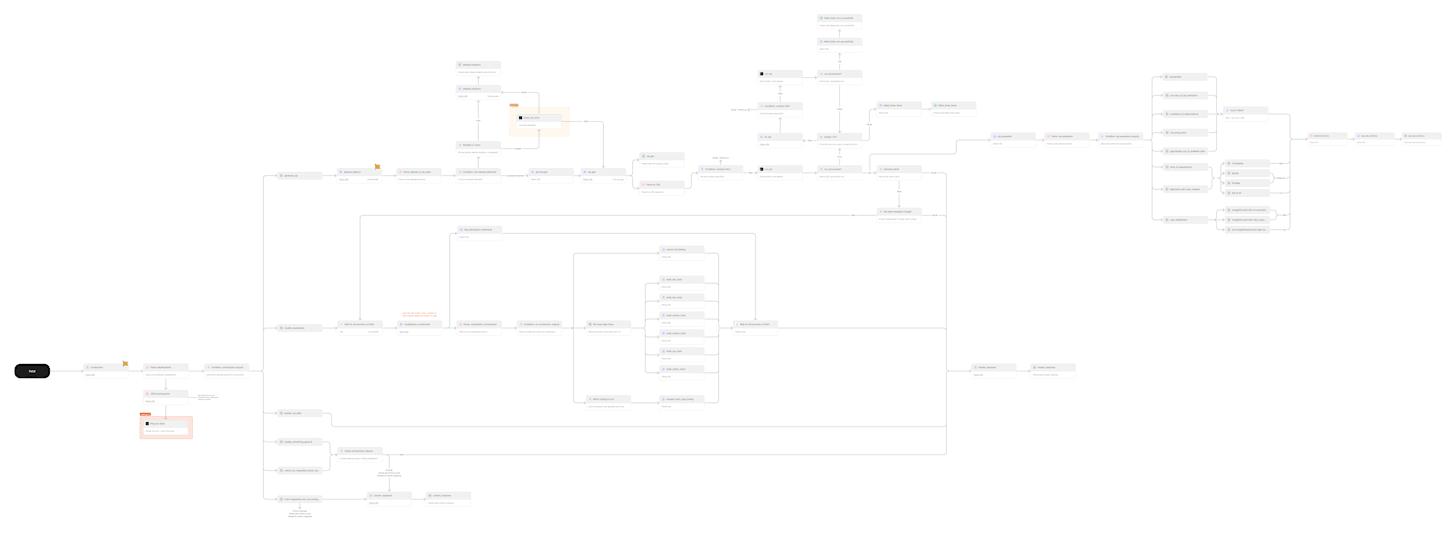

Building software for agents

As we transitioned our app from workflows to agents, we realized that the rigidity we had built into each step and process actually decreased the agent’s ability to accomplish the task.

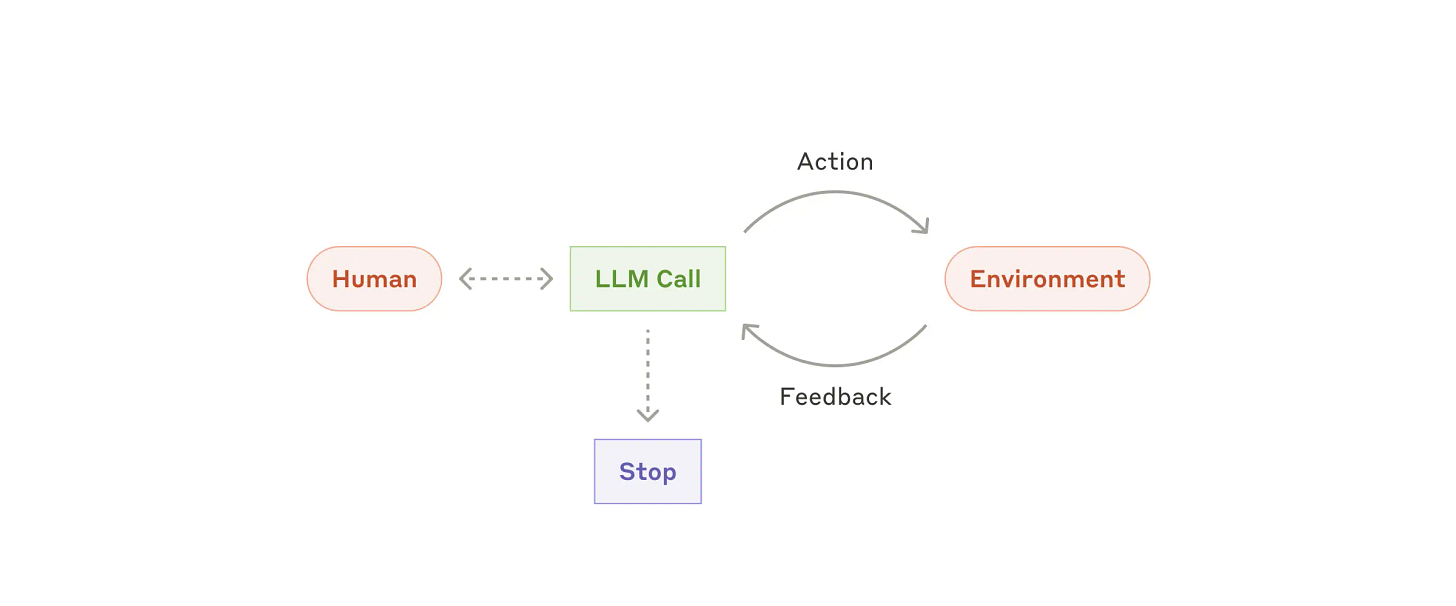

It felt like my job had actually shifted from building agents to building software for agents. Instead of writing logical steps, we started to think more about what are the building blocks (tools) and environment that the agent needs in order to accomplish a given task successfully.

Our product essentially turned into this:

Multi-agent systems

At the beginning of the year, it felt like the models still struggled on complex, multi-deliverable tasks.

In our case, with Buster, we had built out an AI Data Analyst product. This was an agent that needed to be able to take a user request, interpret it, skim through data warehouse documentation, explore nuances in the data, and ultimately output 1 or more visualizations to best answer the user request.

We found that for reliable outputs we still had to decompose the objective into smaller tasks and we would allow the agent to transition through “modes” in order to accomplish the task.

An example of this was an “exploratory” mode where the agent had access to tools to run SQL and read through documentation, but could not build visualizations. After thorough exploration, Buster would then transition into a “visualization” mode where it could build a chart, report, or dashboard.

If we combined them, we typically saw the agent ending its exploration early and jumping right into visualization creation.
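One way to picture the mode split is as tool gating: each mode exposes a different tool set, and only an explicit hand-off moves the agent forward. The sketch below is an assumption about the general shape, not Buster's actual code, and every tool name in it is hypothetical.

```python
# Hypothetical tool names; the real tool set is not shown in this post.
MODE_TOOLS = {
    "exploratory": {"run_sql", "read_docs", "finish_exploration"},
    "visualization": {"create_chart", "create_dashboard"},
}


class ModalAgent:
    """Tracks the current mode and rejects tools that the mode does not expose."""

    def __init__(self) -> None:
        self.mode = "exploratory"
        self.calls: list[str] = []

    def available_tools(self) -> set[str]:
        return MODE_TOOLS[self.mode]

    def call(self, tool: str) -> None:
        if tool not in self.available_tools():
            raise PermissionError(f"{tool!r} is not available in {self.mode} mode")
        self.calls.append(tool)
        # Only an explicit hand-off tool moves the agent to the next mode.
        if tool == "finish_exploration":
            self.mode = "visualization"
```

Because chart-building tools simply do not exist in exploratory mode, the agent cannot skip ahead to visualization, which is the failure described above.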

As of now, the models have gotten good enough that we’ve combined them into one “Analyst” agent.

Manus AI: These models are good at coding

When it comes to designing tools and environments for agents, I tend to lean into how you can let the models use their native coding skills in tools.

I had seen that Manus AI was able to achieve some awesome features and flexibility by not using tool calls for APIs and instead allowing their agent to write Python that would hit the APIs directly.

Instead of being restricted to predefined, rigid tool calls, their agent would search for documentation, find it, and build the code for it.
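A minimal sketch of what a single “run code” tool could look like, assuming the agent hands back a Python source string. This is not Manus's implementation, and `run_generated_code` is a hypothetical name. It runs the code in a fresh interpreter with a timeout and returns the output as the observation. A subprocess alone is not a security sandbox, so real deployments would need proper isolation.

```python
import subprocess
import sys


def run_generated_code(code: str, timeout_s: float = 5.0) -> dict:
    """Execute a code string in a fresh interpreter and capture what it printed."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return {"ok": False, "stdout": "", "stderr": "timed out"}
    # stderr carries the traceback, which the agent can read and fix on the next turn.
    return {"ok": proc.returncode == 0, "stdout": proc.stdout, "stderr": proc.stderr}
```

The appeal is that one general tool replaces many narrow ones: any API the model can write a client for becomes reachable without a dedicated tool definition.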

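Anthropic recently published an engineering post on this same pattern, describing agents that write code to call tools exposed through MCP instead of invoking each tool directly.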

2026: The problems to be solved

Ultimately, this whole article has been a pretty good demonstration of the bitter lesson. We did what we could at different times to achieve reliable performance, but ultimately as the general purpose models have gotten better, they’ve solved a lot of the problems.

A few problems I’m particularly interested in remain unsolved going into 2026.

Heuristics

As the models have gotten better and we transition from rigid steps to building blocks, agents need heuristics and patterns to match for given workflows.

Most of the general purpose agents are subpar at vertical workflows. They usually lack the detail and nuance needed to use their provided tools and the environment they’re working in.

Coding has been a great beachhead for agents because a lot of the context, best practices, patterns, etc. are written and stored in GitHub. But there are still lots of business processes and daily workflows that have yet to be codified, making it pretty difficult for general purpose models to one-shot them.

I’ll likely be spending most of my time next year diving deep into specific workflows, learning how the best do them, and codifying that into tools, environments, and heuristics for an agent to work with.

Memory

Lots of companies like OpenAI and Anthropic have released memory features recently. In my circles, most people turn these off, mainly due to the application’s inability to start fresh on a given task. It’s a very difficult problem to decide whether a previous memory is useful for the current task.

People seem to really like the “projects” or “spaces” products where they can provide context upfront and then when they want that context to be included in the chat, they just click into that space.

Adding in memory can also make workflow heuristics difficult. You may have designed your agent to accomplish a task perfectly, but what if a user likes to do it in a crappy way? They may want the agent to remember “their way of doing it” but that can often conflict with the correct way of doing something.

Autonomy

Most triggers that kick off agentic processes are rigid: a user firing off an ad-hoc request, a scheduled job, or an event of some sort. As you dig into workflows and think about the ideal time for an agent to work on a task, you start to realize that there are many non-digital triggers.

Even if you get the triggers right, what context is actually relevant at a random point in time can be difficult to nail down.

Once again, referring to our AI Analyst agent, I would love for Buster to be doing ad-hoc exploratory research for you in the background and spoon-feeding you data, but the reality is that a lot of context, decisions, events, etc. happen in the physical world.

Great insights! I know you open sourced some of your repo but I haven’t checked - Have you guys open sourced your agent loop?

I ask because where I work in fintech the agent directed loops produced utter garbage, and this is literally one week ago. Wasn’t until we scripted the path, inferred intermediate inputs, and really just scoped out the probabilistic DAG that we were able to get decent results.

I think the big differentiator is likely some form of automated feedback. Does the code compile, tests pass, etc. Not really possible to do that in our domain

Love this!